Author: Guozhang Wang

Why ZooKeeper Was Replaced with KRaft – The Log of All Logs

Why replace ZooKeeper with an internal log for Apache Kafka® metadata management? This post explores the rationale behind the replacement, examines why a quorum-based consensus protocol like Raft was utilized […]

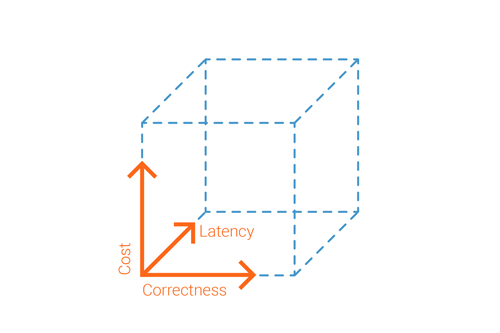

4 Key Design Principles and Guarantees of Streaming Databases

Classic relational database management systems (RDBMS) distribute and organize data in a relatively static storage layer. When queries are requested, they compute on the stored data and then return results […]

Consistency and Completeness: Rethinking Distributed Stream Processing in Apache Kafka

Stream processing has become an important part of the big data landscape, a new programming paradigm bringing asynchronous, long-lived computations to unbounded data in motion. But many people still think […]

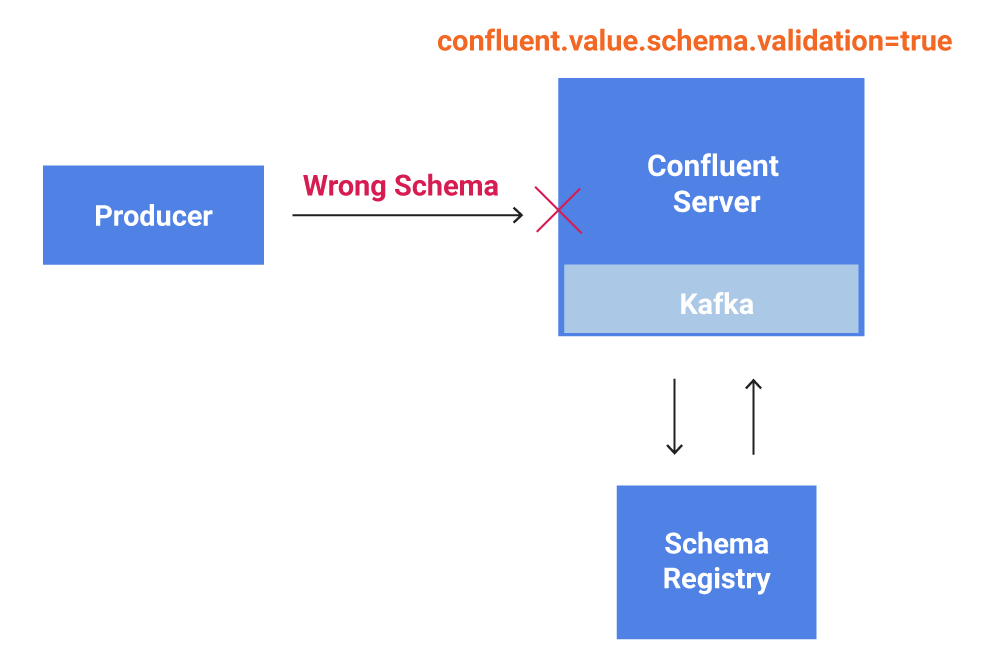

Schema Validation with Confluent Platform 5.4

Robust data governance through Schema Validation on write is now supported in Confluent Platform 5.4. Schema Validation enables the broker to verify that data produced to an Apache Kafka® […]

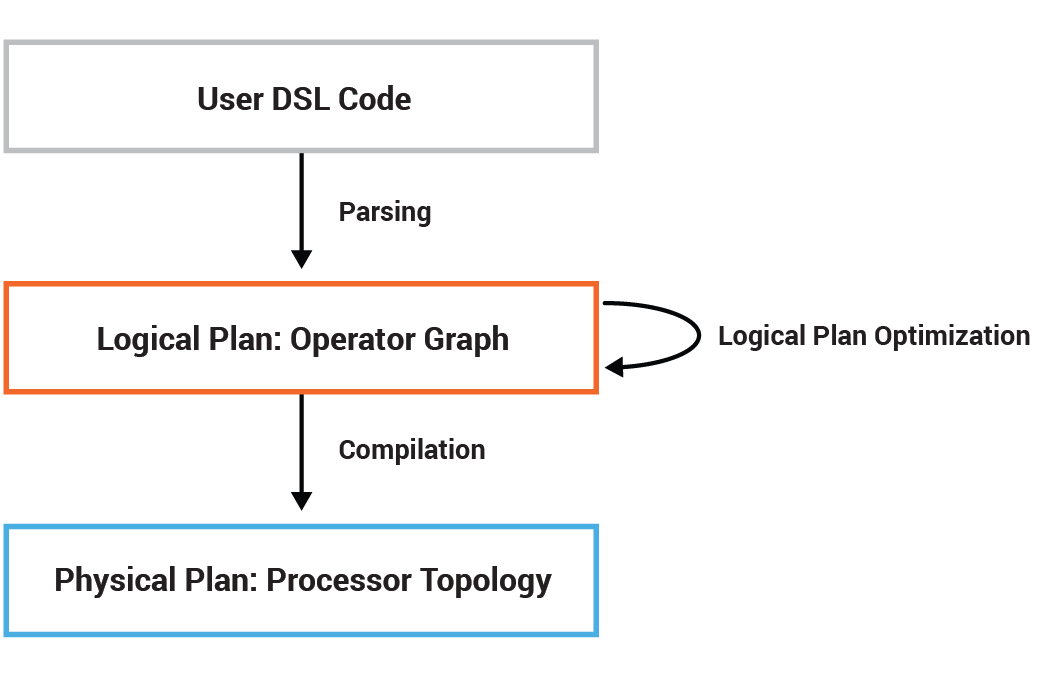

Optimizing Kafka Streams Applications

With the release of Apache Kafka® 2.1.0, Kafka Streams introduced the processor topology optimization framework at the Kafka Streams DSL layer. This framework opens the door for various optimization techniques […]

Streams and Tables: Two Sides of the Same Coin

We are happy to announce that our paper Streams and Tables: Two Sides of the Same Coin is published and available for free download. The paper was presented at the […]

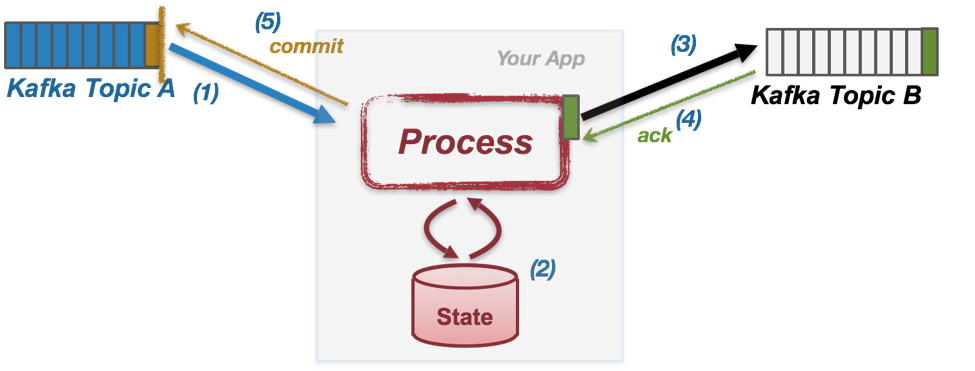

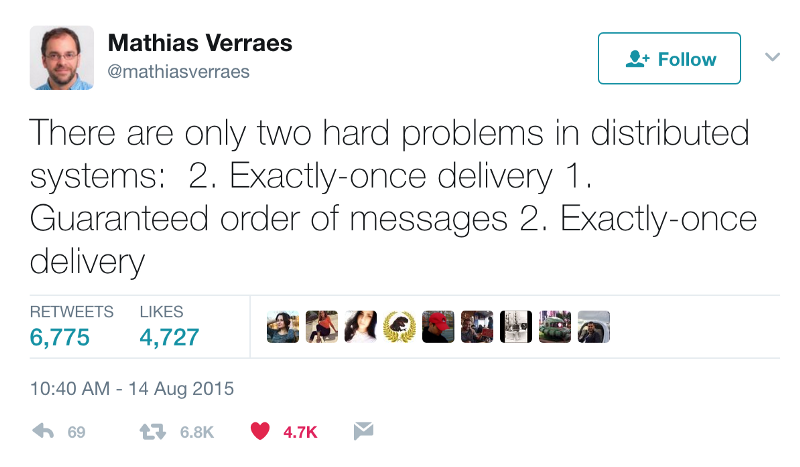

Enabling Exactly-Once in Kafka Streams

This blog post is the third and last in a series about the exactly-once semantics for Apache Kafka®. See Exactly-once Semantics are Possible: Here’s How Kafka Does it for the […]

Exactly-Once Semantics Are Possible: Here’s How Kafka Does It

I’m thrilled that we have hit an exciting milestone the Apache Kafka® community has long been waiting for: we have introduced exactly-once semantics in Kafka in the 0.11 release and […]

Log Compaction | Highlights in the Apache Kafka and Stream Processing Community | July 2016

Here comes the July 2016 edition of Log Compaction, a monthly digest of highlights in the Apache Kafka and stream processing community. Want to share some exciting news on this […]

Log Compaction | Highlights in the Apache Kafka and Stream Processing Community | June 2016

After months of testing and seven voting rounds, Apache Kafka 0.10.0 and the corresponding Confluent Platform 3.0 have finally been released. Cheers!

Confluent at VLDB 2015 | Building a Replicated Logging System with Apache Kafka

There has been renewed interest in using log-centric architectures to scale distributed systems that provide efficient durability and high availability. In this approach, a collection of distributed servers can […]