Apache Kafka

Data Products, Data Contracts, and Change Data Capture

Change data capture is a popular method to connect database tables to data streams, but it comes with drawbacks. The next evolution of the CDC pattern, first-class data products, provides resilient pipelines that support both real-time and batch processing while isolating upstream systems...

Unlock Cost Savings with Freight Clusters–Now in General Availability

Confluent Cloud Freight clusters are now Generally Available on AWS. In this blog, learn how Freight clusters can save you up to 90% at GBps+ scale.

Unleash Real-Time Agentic AI: Introducing Streaming Agents on Confluent Cloud

Build event-driven agents on Apache Flink® with Streaming Agents on Confluent Cloud—fresh context, MCP tool calling, real-time embeddings, and enterprise governance.

What’s New in Apache Kafka 2.4

On behalf of the Apache Kafka® community, it is my pleasure to announce the release of Apache Kafka 2.4.0. This release includes a number of key new features and improvements […]

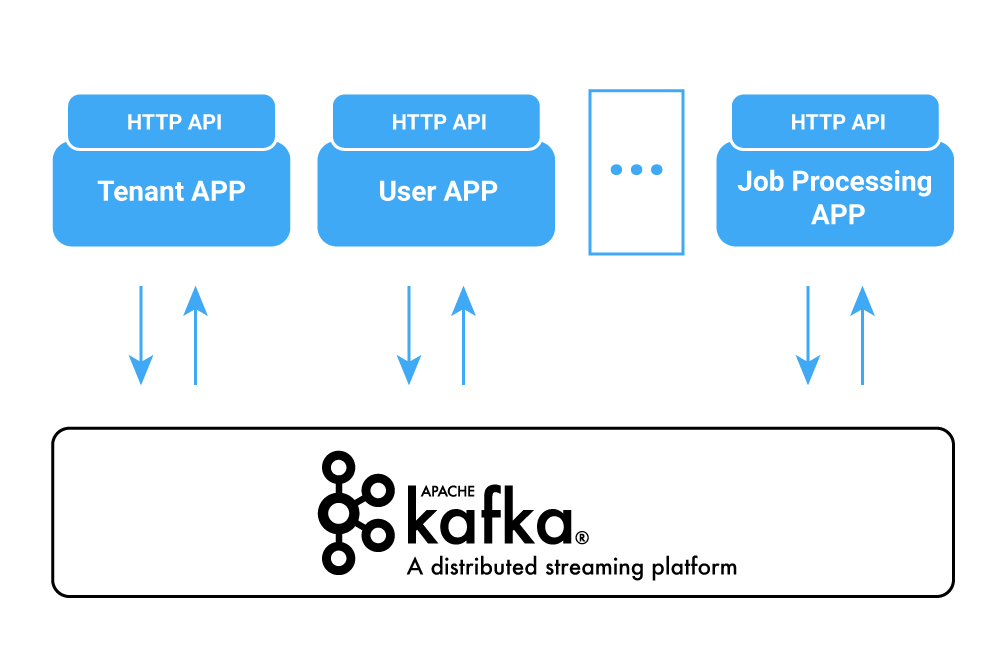

Integrating Apache Kafka With Python Asyncio Web Applications

Modern Python has very good support for cooperative multitasking. Coroutines were first added to the language in version 2.5 with PEP 342 and their use is becoming mainstream following the […]

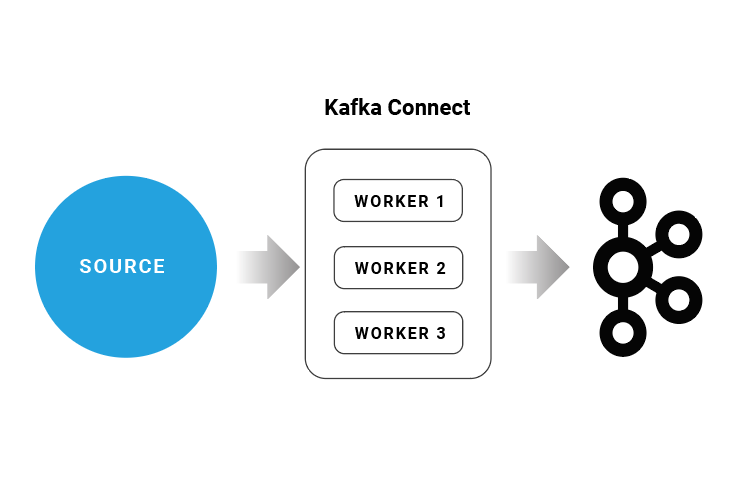

How to Use Single Message Transforms in Kafka Connect

Kafka Connect is the part of Apache Kafka® that provides reliable, scalable, distributed streaming integration between Apache Kafka and other systems. Kafka Connect has connectors for many, many systems, and […]

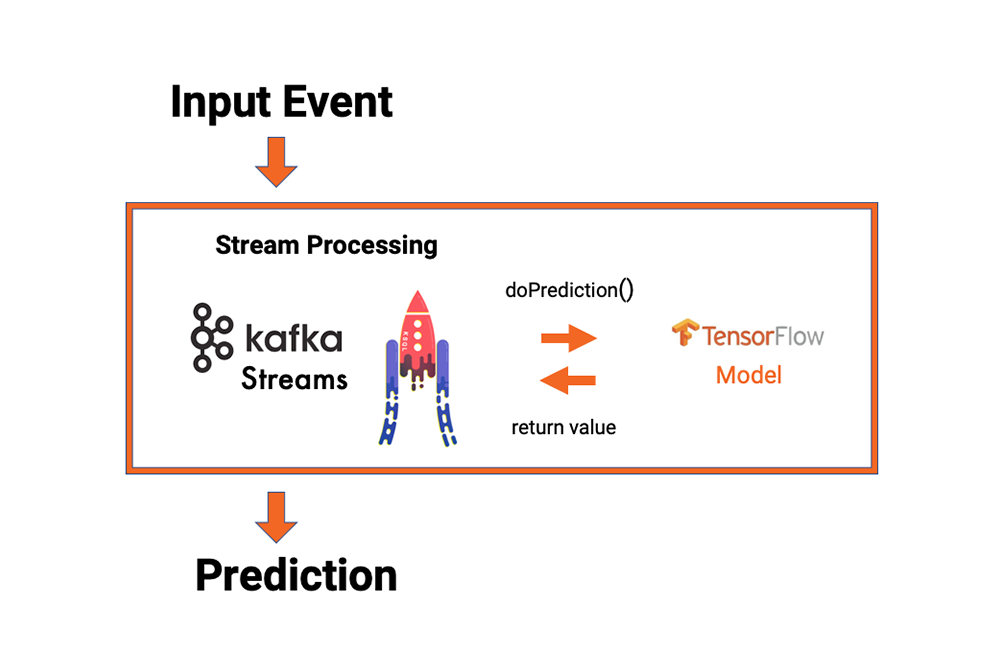

Machine Learning and Real-Time Analytics in Apache Kafka Applications

The relationship between Apache Kafka® and machine learning (ML) is an interesting one that I’ve written about quite a bit in How to Build and Deploy Scalable Machine Learning in […]

Getting Started with Rust and Apache Kafka

I’ve written an event sourcing bank simulation in Clojure (a Lisp built for Java virtual machines, or JVMs) called open-bank-mark, which you are welcome to read about in my previous […]

4 Steps to Creating Apache Kafka Connectors with the Kafka Connect API

If you’ve worked with the Apache Kafka® and Confluent ecosystem before, chances are you’ve used a Kafka Connect connector to stream data into Kafka or stream data out of it. […]

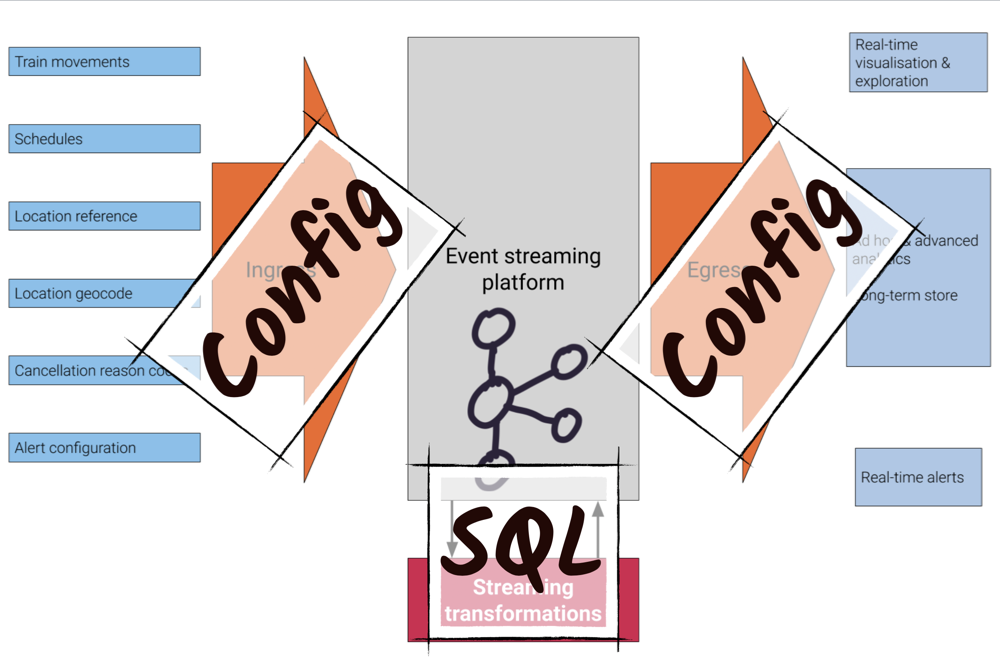

🚂 On Track with Apache Kafka – Building a Streaming ETL Solution with Rail Data

Trains are an excellent source of streaming data—their movements around the network are an unbounded series of events. Using this data, Apache Kafka® and Confluent Platform can provide the foundations […]

Internet of Things (IoT) and Event Streaming at Scale with Apache Kafka and MQTT

The Internet of Things (IoT) is getting more and more traction as valuable use cases come to light. A key challenge, however, is integrating devices and machines to process the […]

How to Run Apache Kafka with Spring Boot on Pivotal Application Service (PAS)

This tutorial describes how to set up a sample Spring Boot application in Pivotal Application Service (PAS), which consumes and produces events to an Apache Kafka® cluster running in Pivotal […]

Real-Time Analytics and Monitoring Dashboards with Apache Kafka and Rockset

In the early days, many companies simply used Apache Kafka® for data ingestion into Hadoop or another data lake. However, Apache Kafka is more than just messaging. The significant difference […]

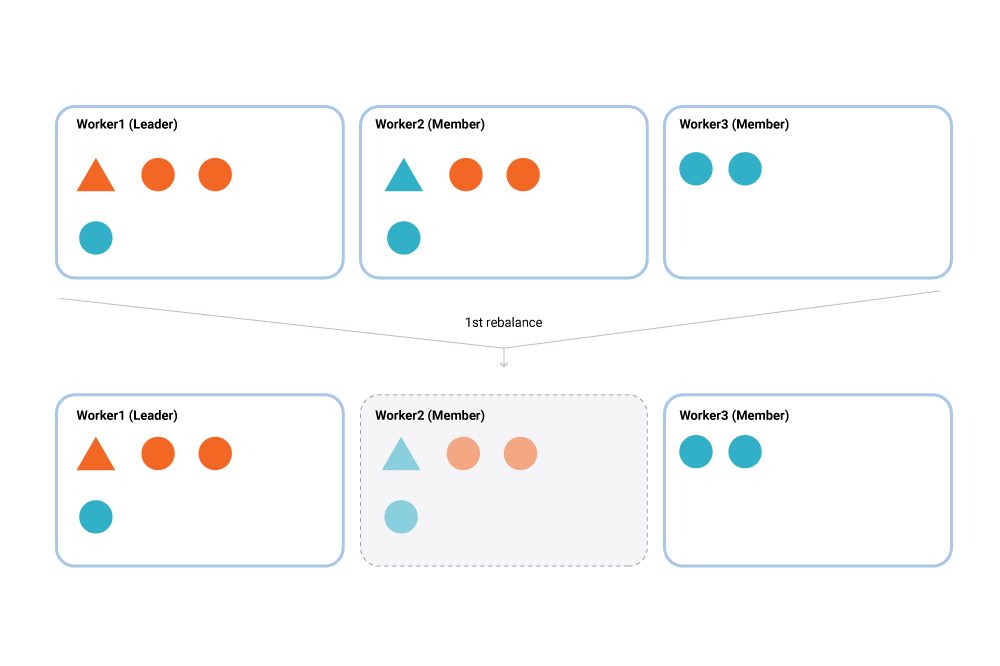

Incremental Cooperative Rebalancing in Apache Kafka: Why Stop the World When You Can Change It?

There is a coming and a going / A parting and often no—meeting again. —Franz Kafka, 1897

Load balancing and scheduling are at the heart of every distributed system, and […]

Building Transactional Systems Using Apache Kafka

Traditional relational database systems are ubiquitous in software systems. They are surrounded by a strong ecosystem of tools, such as object-relational mappers and schema migration helpers. Relational databases also provide […]

Kafka Connect Improvements in Apache Kafka 2.3

With the release of Apache Kafka® 2.3 and Confluent Platform 5.3 came several substantial improvements to the already awesome Kafka Connect. Not sure what Kafka Connect is or need convincing […]

Announcing Tutorials for Apache Kafka

We’re excited to announce Tutorials for Apache Kafka®, a new area of our website for learning event streaming. Kafka Tutorials is a collection of common event streaming use cases, with […]