Author: Yeva Byzek

4 Must-Have Tests for Your Apache Kafka CI/CD with GitHub Actions

Millions of developers code on GitHub, and if you’re one of those developers using it to create Apache Kafka® applications—for which there are 70,000+ Kafka-related repositories—it is natural to integrate […]

Applying Data Pipeline Principles in Practice: Exploring the Kafka Summit Keynote Demo

Real-time data streaming necessitates a new way to think about modern data system design. In the keynote at Kafka Summit London 2022, Confluent CEO Jay Kreps described five principles of […]

How to Make Apache Kafka Clients Go Fast(er) on Confluent Cloud

Imagine your team wants to design a data streaming architecture and you’re in charge of creating the prototype. Within a few minutes, you provision a fully managed Apache Kafka® cluster […]

42 Things You Can Stop Doing Once ZooKeeper Is Gone from Apache Kafka

Soon, Apache Kafka® will no longer need ZooKeeper! With KIP-500, Kafka will include its own built-in consensus layer, removing the ZooKeeper dependency altogether. The next big milestone in this effort […]

Ensure Data Quality and Data Evolvability with a Secured Schema Registry

Organizations define standards and policies around the usage of data to ensure the following: (1) Data quality: data streams follow the defined data standards as represented in schemas; (2) Data evolvability: Schemas […]

Preparing Your Clients and Tools for KIP-500: ZooKeeper Removal from Apache Kafka

As described in the blog post Apache Kafka® Needs No Keeper: Removing the Apache ZooKeeper Dependency, when KIP-500 lands next year, Apache Kafka will replace its usage of Apache ZooKeeper […]

Creating a Serverless Environment for Testing Your Apache Kafka Applications

If you are taking your first steps with Apache Kafka®, looking at a test environment for your client application, or building a Kafka demo, there are two “easy button” paths […]

Streaming Heterogeneous Databases with Kafka Connect – The Easy Way

Building a Cloud ETL Pipeline on Confluent Cloud shows you how to build and deploy a data pipeline entirely in the cloud. However, not all databases can be in the […]

Building a Cloud ETL Pipeline on Confluent Cloud

As enterprises move more and more of their applications to the cloud, they are also moving their on-prem ETL pipelines to the cloud, as well as building new ones. There […]

Shoulder Surfers Beware: Confluent Now Provides Cross-Platform Secret Protection

Compliance requirements often dictate that services should not store secrets as cleartext in files. These secrets may include passwords, such as the values for ssl.key.password, ssl.keystore.password, and ssl.truststore.password configuration parameters […]

17 Ways to Mess Up Self-Managed Schema Registry

Part 1 of this blog series by Gwen Shapira explained the benefits of schemas, contracts between services, and compatibility checking for schema evolution. In particular, using Confluent Schema Registry makes […]

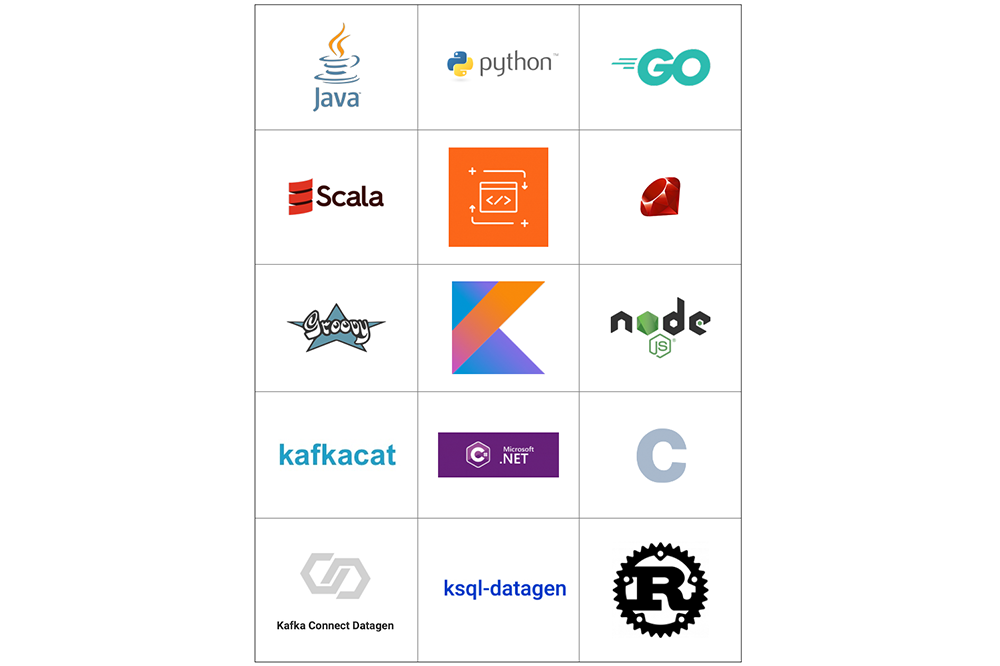

12 Programming Languages Walk into a Kafka Cluster…

When it was first created, Apache Kafka® had a client API for just Scala and Java. Since then, the Kafka client API has been developed for many other programming languages […]

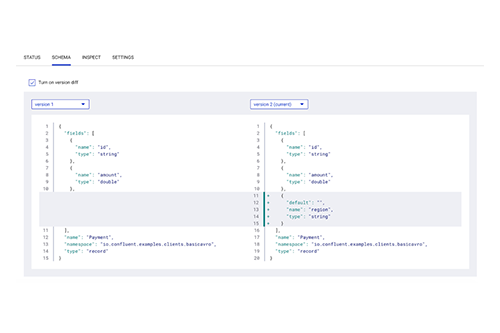

Dawn of Kafka DevOps: Managing and Evolving Schemas with Confluent Control Center

As we announced in Introducing Confluent Platform 5.2, the latest release introduces many new features that enable you to build contextual event-driven applications. In particular, the management and monitoring capabilities […]

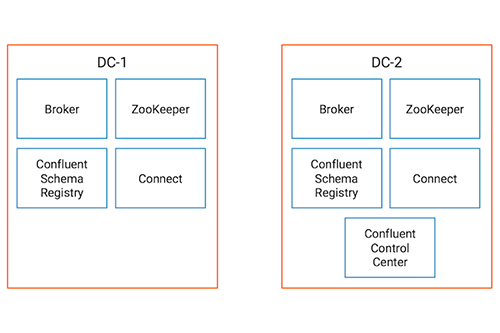

Monitoring Data Replication in Multi-Datacenter Apache Kafka Deployments

Enterprises run modern data systems and services across multiple cloud providers, private clouds and on-prem multi-datacenter deployments. Instead of having many point-to-point connections between sites, the Confluent Platform provides an […]