Technology

Data Products, Data Contracts, and Change Data Capture

Change data capture (CDC) is a popular method to connect database tables to data streams, but it comes with drawbacks. The next evolution of the CDC pattern, first-class data products, provides resilient pipelines that support both real-time and batch processing while isolating upstream systems...

Unlock Cost Savings with Freight Clusters–Now in General Availability

Confluent Cloud Freight clusters are now Generally Available on AWS. In this blog, learn how Freight clusters can save you up to 90% at GBps+ scale.

Unleash Real-Time Agentic AI: Introducing Streaming Agents on Confluent Cloud

Build event-driven agents on Apache Flink® with Streaming Agents on Confluent Cloud—fresh context, MCP tool calling, real-time embeddings, and enterprise governance.

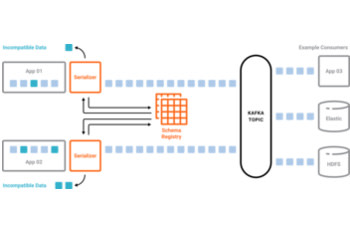

Decoupling Systems with Apache Kafka, Schema Registry and Avro

As your Apache Kafka® deployment starts to grow, the benefits of using a schema registry quickly become compelling. Confluent Schema Registry, which is included in the Confluent Platform, enables you […]

Introducing Confluent Platform 5.0

We are excited to announce the release of Confluent Platform 5.0, the enterprise streaming platform built on Apache Kafka®. At Confluent, our vision is to place a streaming platform at […]

Ansible Playbooks for Confluent Platform

Here at Confluent, we want to see each Confluent Platform installation be successful right from the start. That means going beyond beautiful APIs and spectacular stream processing features. We want […]

Apache Kafka vs. Enterprise Service Bus (ESB) – Friends, Enemies or Frenemies?

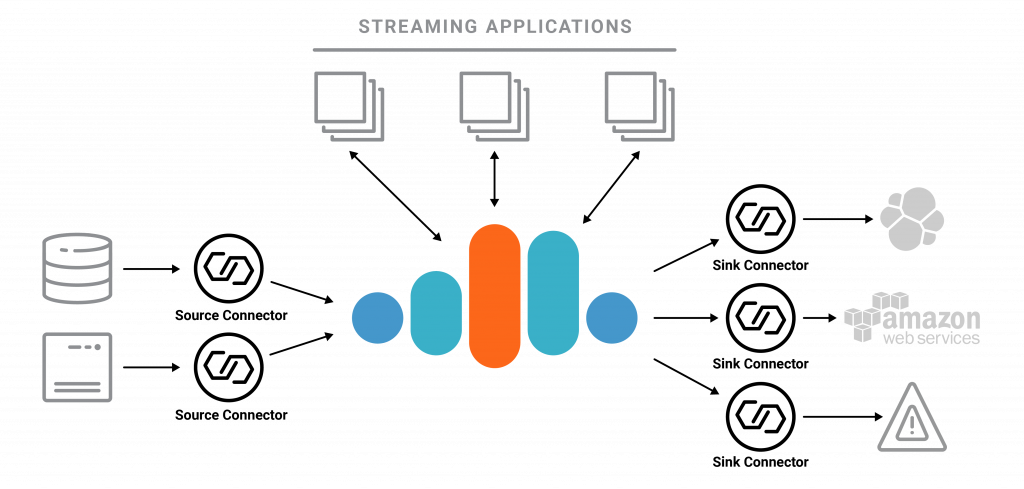

Typically, an enterprise service bus (ESB) or another integration solution, such as an extract-transform-load (ETL) tool, has been used to try to decouple systems. However, the sheer number of connectors, as well […]

June Preview Release: Packing Confluent Platform with the Features You Requested!

We are very excited to announce Confluent Platform June 2018 Preview. This is our most feature-packed preview release for Confluent Platform since we started doing our monthly preview releases in […]

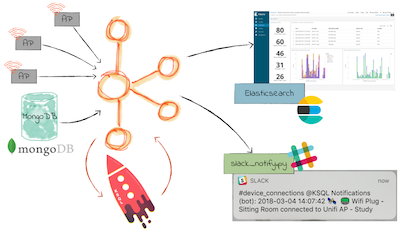

We ❤️ syslogs: Real-time syslog processing with Apache Kafka and KSQL – Part 3: Enriching events with external data

Using KSQL, the SQL streaming engine for Apache Kafka®, it’s straightforward to build streaming data pipelines that filter, aggregate, and enrich inbound data. The data could be from numerous sources, […]

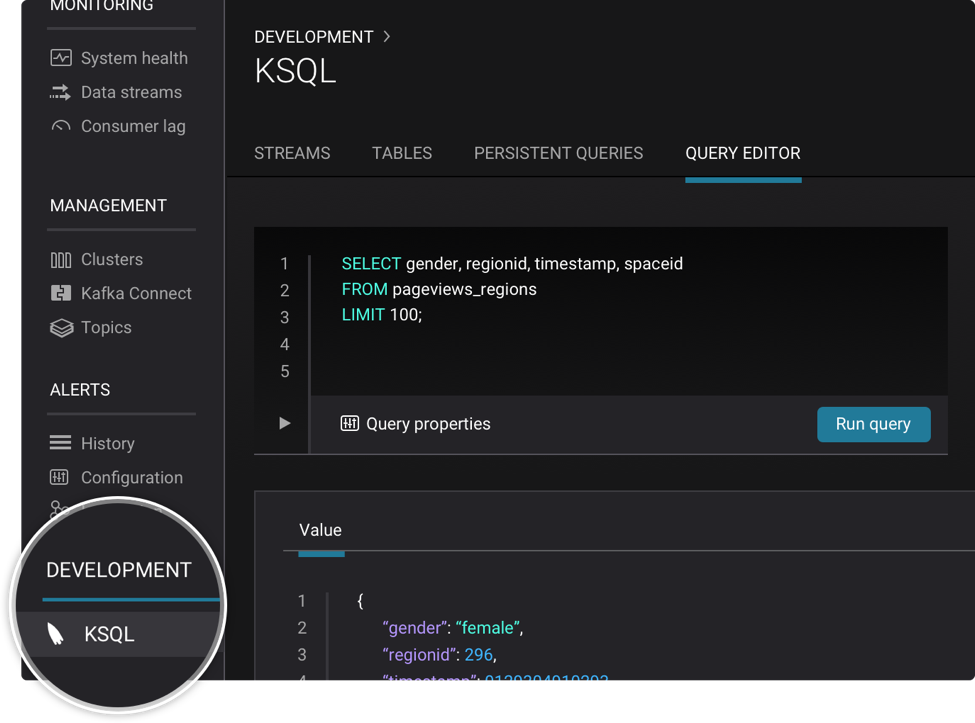

Stream Processing Made Easy With Confluent Cloud and KSQL

Confluent Cloud™ is a fully managed streaming data service based on open-source Apache Kafka. With Confluent Cloud, developers can accelerate building mission-critical streaming applications based on a single source of truth. […]

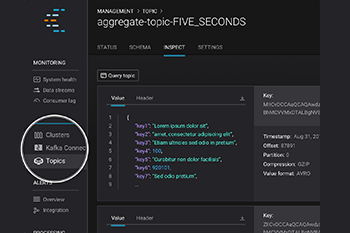

May Preview Release: Advancing KSQL and Schema Registry

We are very excited to announce the Confluent Platform May 2018 Preview release! The May Preview introduces powerful new capabilities for KSQL and the Schema Registry UI. Read on to […]

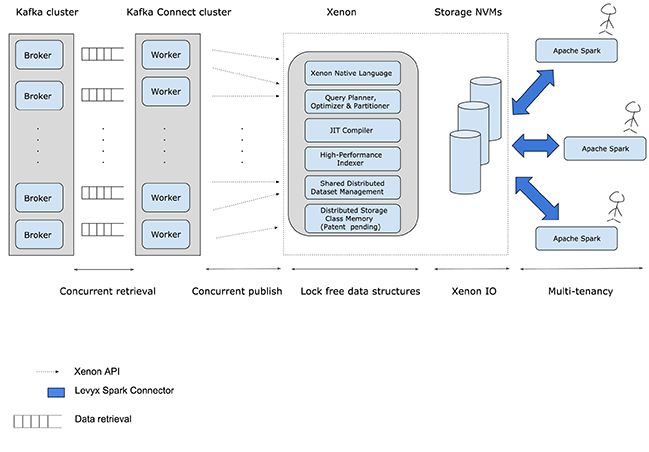

Analytics on Bare Metal: Xenon and Kafka Connect

The following post is a guest blog from Tushar Sudhakar Jee, Software Engineer at Levyx, responsible for Kafka infrastructure. You may also find this post on Levyx’s blog. Abstract: As part […]

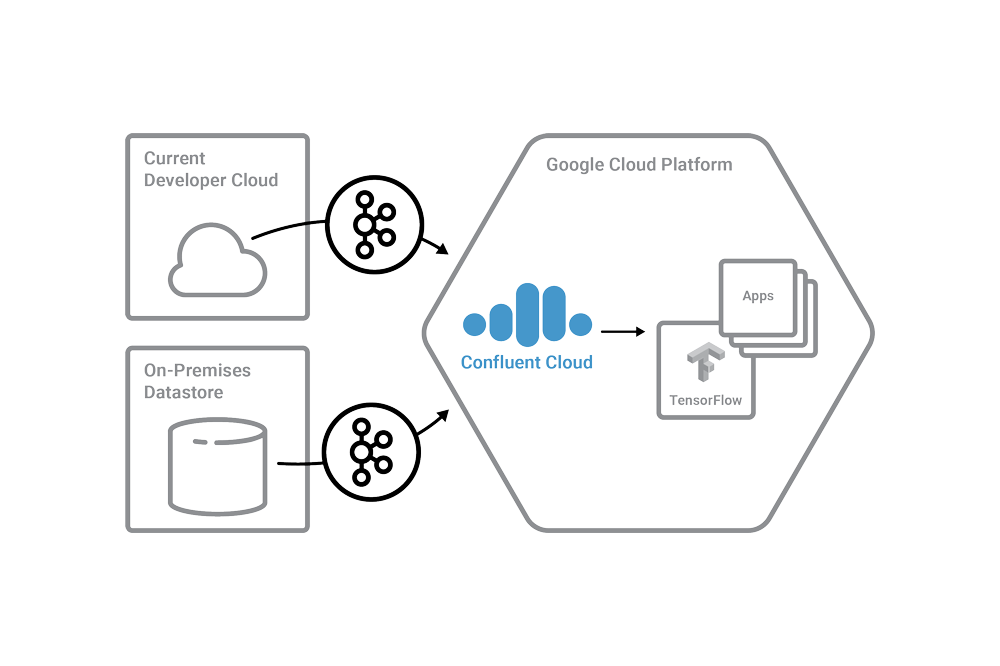

Introducing Self-Service Apache Kafka for Developers

New Confluent Cloud and Ecosystem Expansion to Google Cloud Platform. The vision for Confluent Cloud is simple. We want to provide an event streaming service to support all customers on […]

Visualizations on Apache Kafka Made Easy with KSQL

We’re pleased to welcome our partner Arcadia Data to the Confluent blog today. Shant Hovsepian is the CTO and co-founder of Arcadia Data, and is going to tell us about […]

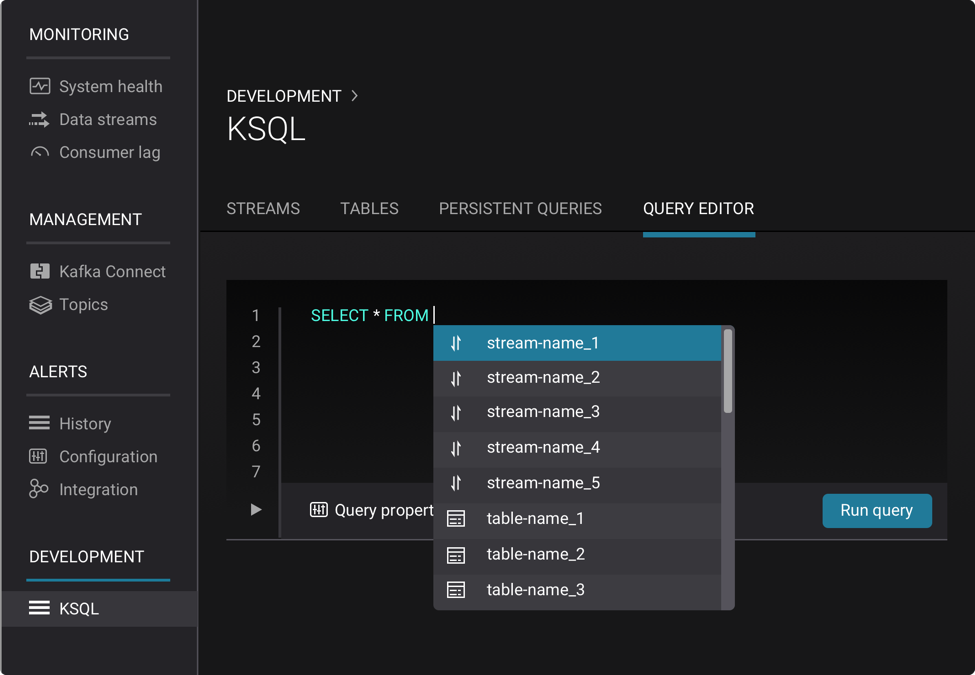

Level Up Your KSQL

Now that KSQL is available for production use as a part of the Confluent Platform, it has never been easier to run the open-source streaming SQL engine for Apache Kafka®. […]

Introducing the Confluent Operator: Apache Kafka on Kubernetes Made Simple

At Confluent, our mission is to put a Streaming Platform at the heart of every digital company in the world. This means making it easy to deploy and use Apache […]

Introducing Confluent Platform Preview Releases

Historically, Confluent has delivered a new release of Confluent Platform three times per year. While this cadence meets the needs of a meaningful portion of our […]