Confluent Blog

Data Products, Data Contracts, and Change Data Capture

Change data capture (CDC) is a popular method for connecting database tables to data streams, but it comes with drawbacks. The next evolution of the CDC pattern, first-class data products, provides resilient pipelines that support both real-time and batch processing while isolating upstream systems...

Unlock Cost Savings with Freight Clusters–Now in General Availability

Confluent Cloud Freight clusters are now generally available on AWS. In this blog post, learn how Freight clusters can cut your costs by up to 90% at GBps+ scale.

Unleash Real-Time Agentic AI: Introducing Streaming Agents on Confluent Cloud

Build event-driven agents on Apache Flink® with Streaming Agents on Confluent Cloud—fresh context, MCP tool calling, real-time embeddings, and enterprise governance.

Beyond Data: How a Sales Director Drives Real Impact for Customers

Confluent Champion blog post featuring Aamir Thoker

Best Practices for Validating Apache Kafka® Disaster Recovery and High Availability

Learn best practices for validating your Apache Kafka® disaster recovery and high availability strategies, using techniques like chaos testing, monitoring, and documented recovery playbooks.

How to Scale and Secure Kafka Connect in Production Environments

Learn best practices for running Kafka Connect in production—covering scaling, security, error handling, and monitoring to build resilient data integration pipelines.

The BI Lag Problem and How Event-Driven Workflows Solve It

Learn how to automate BI with real-time streaming. Explore event-driven workflows that deliver instant insights and close the gap between data and action.

How Does Real-Time Streaming Prevent Fraud in Banking and Payments?

Discover how banks and payment providers use Apache Kafka® streaming to detect and block fraud in real time. Learn patterns for anomaly detection, risk mitigation, and trusted automation.

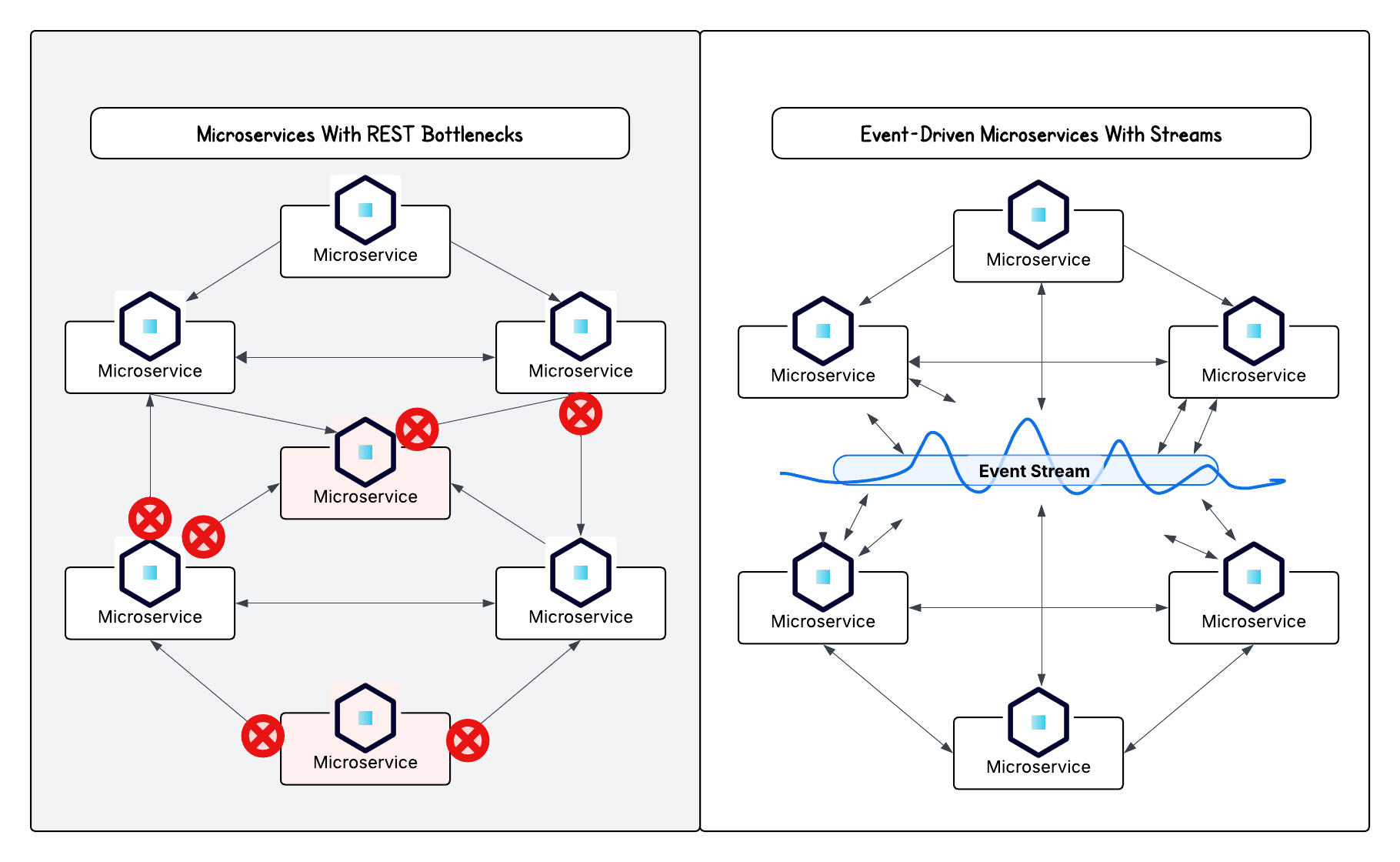

Do Microservices Need Event-Driven Architectures?

Discover why microservices architectures thrive with event-driven design and how streaming powers applications that are agile, resilient, and responsive in real time.

Making Data Quality Scalable With Real-Time Streaming Architectures

Learn how to validate and monitor data quality in real time with Apache Kafka® and Confluent. Prevent bad data from entering pipelines, improve trust in analytics, and power reliable business decisions.

How to Build Real-Time Alerts to Stay Ahead of Critical Events

Learn how to design real-time alerts with Apache Kafka® using alerting patterns, anomaly detection, and automated workflows for resilient responses to critical events.

Data Streaming: The Key to Tackling Data Challenges for AI Success

Explore common data management challenges and how data streaming helps overcome them—powering enterprise AI with real-time insights.

Powering Event-Driven, Multi-Agent AI: Confluent Named MongoDB Global Tech Partner of the Year

Confluent is honored to be named MongoDB’s 2025 Global Tech Partner of the Year. Together, we’re helping enterprises build intelligent, event-driven AI systems with real-time data streaming, vector search, and multi-agent orchestration.

Strengthen Security With TLS 1.3 for Confluent Cloud Clusters

Confluent Cloud is introducing TLS 1.3 for stronger security. It’s available now as an opt-in feature for Dedicated clusters. On April 30, 2026, TLS 1.3 will be enabled by default for all remaining cluster types. Confluent will continue to support TLS 1.2.

How to Build Real-Time Apache Kafka® Dashboards That Drive Action

Learn how to build real-time dashboards with Apache Kafka® that help your organization go beyond simple data visualization and analysis paralysis to instant analysis and action.

Why More Teams Are Starting With Confluent Cloud on AWS Marketplace

Build real-time apps in minutes. Confluent Cloud on AWS Marketplace offers fully managed Kafka with Flink, Iceberg, 120+ connectors, and enterprise-grade governance—so your team spends less time on ops and more on innovation.

Confluent Cloud for Government Achieves FedRAMP 20x Low Authorization

Confluent achieves FedRAMP Ready status for its Confluent Cloud for Government offering, marking an essential milestone in providing secure data streaming services to government agencies, and showing a commitment to rigorous security standards. This certification marks a key step towards full...